~ Problem ~

If we only run a few Docker containers on our local dev machine, it is not too cumbersome to (re)use the domain name "localhost" and ports 80/5432/3006/...

But when we start running several different projects' containers at the same time we quickly find ourselves juggling non-standard ports or stopping one project to start another.

This is because Docker, by default, will bind port-mappings to IP 0.0.0.0, which means listening on the given port on all network interfaces.

As a result, no other application can reuse that port. For example, if we are running a container mapped to 0.0.0.0:1234, we cannot start another container on port 1234 on any interface.
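The conflict can be reproduced without Docker. The following is a minimal sketch using plain TCP sockets, assuming Linux bind semantics; the helper name try_bind is made up for illustration, and port 0 simply asks the OS for any free port.

```python
import errno
import socket
from typing import Optional


def try_bind(ip: str, port: int) -> Optional[socket.socket]:
    """Return a listening TCP socket on ip:port, or None if the address is in use."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind((ip, port))
        sock.listen()
        return sock
    except OSError as exc:
        sock.close()
        if exc.errno == errno.EADDRINUSE:
            return None
        raise


# Listen on all interfaces, the way a default Docker port mapping does.
wildcard = try_bind("0.0.0.0", 0)
port = wildcard.getsockname()[1]

# The same port on a specific loopback address is now refused
# (assumption: Linux behaviour, other systems may differ).
specific = try_bind("127.0.0.1", port)
print(f"127.0.0.1:{port} available while 0.0.0.0:{port} is bound: {specific is not None}")

wildcard.close()
if specific is not None:
    specific.close()
```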

~ Solution ~

We want to run many containers (web servers, databases, etc.) and allow them to be mapped to the same ports. We also want to (optionally) assign domain names to our containers. We want a solution that works in any local application, with any ports/protocols, without configuring a SOCKS proxy, port forwarding or SSH tunneling.

Give each container (or project) its own IP

The easiest way to allow for overlapping ports is to give each container port-mapping its own IP-address. But how many IP-addresses do you have pointing to your machine? Say hello to 127/8.

In fact, all addresses from 127.0.0.1 to 127.255.255.254 point to your local machine.
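To see this in practice, here is a small sketch that opens two listeners on the same port, each on a different loopback address. It assumes the operating system accepts any 127.x.x.x address out of the box, as Linux does; some systems (macOS, for example) need each extra address added as an alias first. The addresses 127.10.1.1 and 127.10.2.1 and the helper listen_on are picked for illustration only.

```python
import socket


def listen_on(ip: str, port: int) -> socket.socket:
    """Open a listening TCP socket on exactly one IP address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((ip, port))
    sock.listen()
    return sock


# Port 0 asks the OS for a free port; the second listener then reuses it.
first = listen_on("127.10.1.1", 0)
port = first.getsockname()[1]
second = listen_on("127.10.2.1", port)

print("listening on", first.getsockname(), "and", second.getsockname())

first.close()
second.close()
```

Both listeners coexist because each one is bound to a single address instead of 0.0.0.0.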

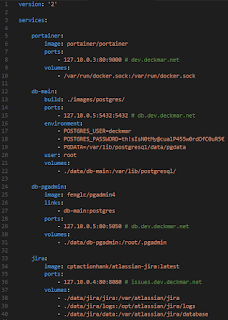

So, let's bind our port-mappings to a suitable local IP address. Docker allows us to do this by prefixing the mapping with the IP address it should bind to on the host. The port syntax is the same in docker run and docker-compose.

For example, the address space 127.10.x.x can be reserved for containers. Note that the same IP can be reused as long as there are no overlapping ports. This is useful for pairing a database with its web-based administration tool.
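As a rough sketch of such a layout, the mappings below use Docker's HOST_IP:HOST_PORT:CONTAINER_PORT form, which is the string that follows -p in docker run or sits under ports: in a docker-compose file. The service names, the addresses and the two helper functions are hypothetical; the script only checks that no two mappings claim the same host IP and port.

```python
import ipaddress
from collections import Counter

# Hypothetical port mappings, e.g. `docker run -p 127.10.1.1:80:80 ...`
MAPPINGS = {
    "project1-web": "127.10.1.1:80:80",
    "project1-db": "127.10.1.2:5432:5432",
    "project1-dbadmin": "127.10.1.2:80:80",  # same IP as the db, different port
    "project2-web": "127.10.2.1:80:80",      # same port as project1-web, different IP
}


def host_endpoint(mapping: str):
    """Split 'IP:HOST_PORT:CONTAINER_PORT' into (host IP, host port)."""
    ip, host_port, _container_port = mapping.split(":")
    return ipaddress.ip_address(ip), int(host_port)


def find_conflicts(mappings: dict) -> list:
    """Return every (host IP, host port) pair claimed by more than one mapping."""
    counts = Counter(host_endpoint(m) for m in mappings.values())
    return [endpoint for endpoint, n in counts.items() if n > 1]


for name, mapping in MAPPINGS.items():
    ip, port = host_endpoint(mapping)
    print(f"{name}: host {ip} port {port} (loopback: {ip.is_loopback})")

print("conflicts:", find_conflicts(MAPPINGS))
```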

Make a neat tree of domain names

Now that our containers have their own IPs, we can start using DNS to access them in a human-friendly way. The easiest way is to make a subtree in a public DNS zone we control.

As an alternative to buying and managing our own public domain (only ~ 20€/year, https://eurodns.com), we can use free wildcard DNS services like nip.io or xip.io. They respond with the IP address embedded in the queried domain name.
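The naming scheme is easy to picture with a local sketch. The function below is not how nip.io is implemented; it only mimics the dotted form of its names (an IPv4 address placed directly in front of .nip.io, optionally preceded by any labels) and the function name embedded_ip is made up for illustration.

```python
import ipaddress
import re
from typing import Optional

# An IPv4 address directly in front of ".nip.io", optionally preceded by labels.
_PATTERN = re.compile(r"(?:^|\.)(\d{1,3}(?:\.\d{1,3}){3})\.nip\.io$")


def embedded_ip(hostname: str) -> Optional[str]:
    """Return the IPv4 address embedded in a nip.io-style name, or None."""
    match = _PATTERN.search(hostname.lower())
    if match is None:
        return None
    try:
        return str(ipaddress.IPv4Address(match.group(1)))
    except ValueError:
        # Looks like an address but is not valid, e.g. an octet above 255.
        return None


for name in ("www.projectname.127.10.1.1.nip.io", "127.10.2.1.nip.io", "example.com"):
    print(name, "->", embedded_ip(name))
```

A real lookup would go through the resolver instead, for instance socket.gethostbyname("www.projectname.127.10.1.1.nip.io"), which needs network access to reach the service.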

Or, if we feel like taking the blue pill, we can run our own DNS in a container.

http://www.damagehead.com/blog/2015/04/28/deploying-a-dns-server-using-docker/

With this approach we can conjure a whole TLD for our containers, e.g. www.projectname.jode

~ Summary ~

This solution (tested on Windows and Linux) takes away port-mapping dilemmas and introduces the ability to use DNS.

A few things to note:

- If any other application listens on 0.0.0.0:80, it will not be possible to listen on e.g. 127.10.2.1:80. A common gotcha is Skype, which does this by default; it can be disabled under Preferences > Advanced > Connection.

- Using 127.x.x.x addresses only works locally. Even though the domain-names look very shareable, they are not.

- To expand this pattern to work between multiple machines, the host needs a (virtual) interface for each external IP, and the network routing for the range needs to be set up accordingly.

Then put your name in the comment, e.g. "rsa-key-John", and (if you want) a passphrase that must be entered before the key can be used.
